OpenAI, the research lab behind ChatGPT and DALL·E, has unveiled Sora, a text-to-video model that has caused a stir across the AI world, sparking both awe and concern.

Sora takes text-to-video generation to a new level, letting users create realistic, detailed videos from just a few words of text. Let’s dive into Sora: its capabilities, limitations, potential applications, and likely impact.

NOTE: All videos and images on this page belong to their respective owner, OpenAI.

What is Sora?

Sora is a diffusion model: it starts with a video that looks like static noise and gradually refines it, over many steps, into a video that matches a text prompt. It interprets the nuances of natural language well, translating detailed descriptions into visually compelling scenes. Whether you describe a bustling cityscape, a fantastical creature in a mystical forest, or even a cat doing backflips on a treadmill, Sora can often bring it to life with remarkable fidelity.
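To make the "start from noise, refine step by step" idea concrete, here is a minimal Python sketch of a diffusion sampling loop. It is not Sora’s implementation: OpenAI has not released Sora’s code, and Sora runs a large transformer over compressed video representations. The assumptions are loud ones: `toy_denoiser` is a hypothetical oracle that already "knows" the clean result for the prompt, the cosine-style noise schedule is simplified, and the update rule is the deterministic DDIM step, one common sampler and not necessarily the one Sora uses.

```python
import math
import random


def toy_denoiser(x_t, t, prompt_target):
    """Hypothetical stand-in for a trained, text-conditioned network.

    A real model would estimate the clean signal from the noisy sample x_t,
    the timestep t and an embedding of the prompt. This oracle simply returns
    the target associated with the prompt, so it ignores x_t and t.
    """
    return list(prompt_target)


def alpha_bar_schedule(steps):
    """Simplified cosine schedule: 1.0 at t=0 (clean), near 0 at t=steps (pure noise)."""
    return [math.cos((t / steps) * math.pi / 2) ** 2 for t in range(steps + 1)]


def sample(prompt_target, steps=50, seed=0):
    """Deterministic DDIM-style sampling: start from noise, denoise step by step."""
    rng = random.Random(seed)
    abar = alpha_bar_schedule(steps)
    # Start from pure Gaussian noise, the "static" the model begins with.
    x = [rng.gauss(0.0, 1.0) for _ in prompt_target]
    for t in range(steps, 0, -1):
        x0_hat = toy_denoiser(x, t, prompt_target)
        # Noise implied by the current sample and the clean-signal estimate.
        eps_hat = [
            (xi - math.sqrt(abar[t]) * x0i) / math.sqrt(1.0 - abar[t])
            for xi, x0i in zip(x, x0_hat)
        ]
        # Move to the next, slightly cleaner noise level.
        x = [
            math.sqrt(abar[t - 1]) * x0i + math.sqrt(1.0 - abar[t - 1]) * ei
            for x0i, ei in zip(x0_hat, eps_hat)
        ]
    return x


# Three "pixel" values standing in for a whole video.
print(sample([0.2, -0.5, 0.9]))
```

Because the toy denoiser is perfect, the loop ends exactly on the target. A trained model only approximates the clean signal at each step, which is why real samples can drift from what the prompt describes.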

Sora serves as a foundation for models that can understand and simulate the real world, a capability we believe will be an important milestone for achieving AGI.

– OpenAI

What are the key features of OpenAI’s Sora?

- High-quality videos: Sora generates highly realistic videos that often surpass the quality of earlier text-to-video models. Its attention to detail, including generally plausible physics and object relationships, makes for a convincing visual experience.

- Complex scene understanding: The model goes beyond rendering individual objects. It captures the relationships between elements, creating scenes with depth, movement, and context, from bustling crowds to intricate interactions between characters.

- Long-term consistency: Where some models struggle with continuity, Sora often keeps characters and objects looking the same even when they are obscured or temporarily leave the frame. This adds to the overall realism and coherence of the generated videos.

- Scalability: Like GPT models, Sora uses a transformer architecture, which scales efficiently with more data and compute. This leaves room for even more complex and realistic video generation as the model grows.
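OpenAI’s technical report explains that, much as GPT models operate on text tokens, Sora operates on "spacetime patches": videos are first compressed into a lower-dimensional latent space and then cut into patches that serve as the transformer’s tokens. The Python sketch below shows only the cutting step, and it works on raw grayscale pixels instead of learned latents. The function name `spacetime_patches` and the patch sizes are illustrative assumptions, not OpenAI’s code.

```python
def spacetime_patches(video, pt, ph, pw):
    """Split a video into non-overlapping spacetime patches.

    video: nested lists indexed as video[frame][row][col] (grayscale values).
    pt, ph, pw: patch size along time, height and width (illustrative choices).
    Returns a list of tokens, each a flat list of pt * ph * pw values.
    """
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    tokens = []
    # Walk the patch grid in time-major order, then rows, then columns.
    for t0 in range(0, frames, pt):
        for y0 in range(0, height, ph):
            for x0 in range(0, width, pw):
                tokens.append([
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return tokens


# A 4-frame, 8x8 toy video with a distinct value per pixel.
video = [[[t * 100 + y * 10 + x for x in range(8)] for y in range(8)] for t in range(4)]
tokens = spacetime_patches(video, pt=2, ph=4, pw=4)
print(len(tokens), len(tokens[0]))  # 8 tokens of 32 values each

# An image is simply a one-frame video.
image_tokens = spacetime_patches([video[0]], pt=1, ph=4, pw=4)
print(len(image_tokens), len(image_tokens[0]))  # 4 tokens of 16 values each
```

A longer or higher-resolution video just becomes a longer token sequence, which is part of why a patch-based transformer can be trained on videos and images of varying durations, resolutions, and aspect ratios.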

How is Sora different from other text-to-video models, and what can it do?

OpenAI’s technical report on Sora highlights several notable capabilities:

- Long video generation: Sora can generate videos up to one minute long, with detailed scenes and multiple characters.

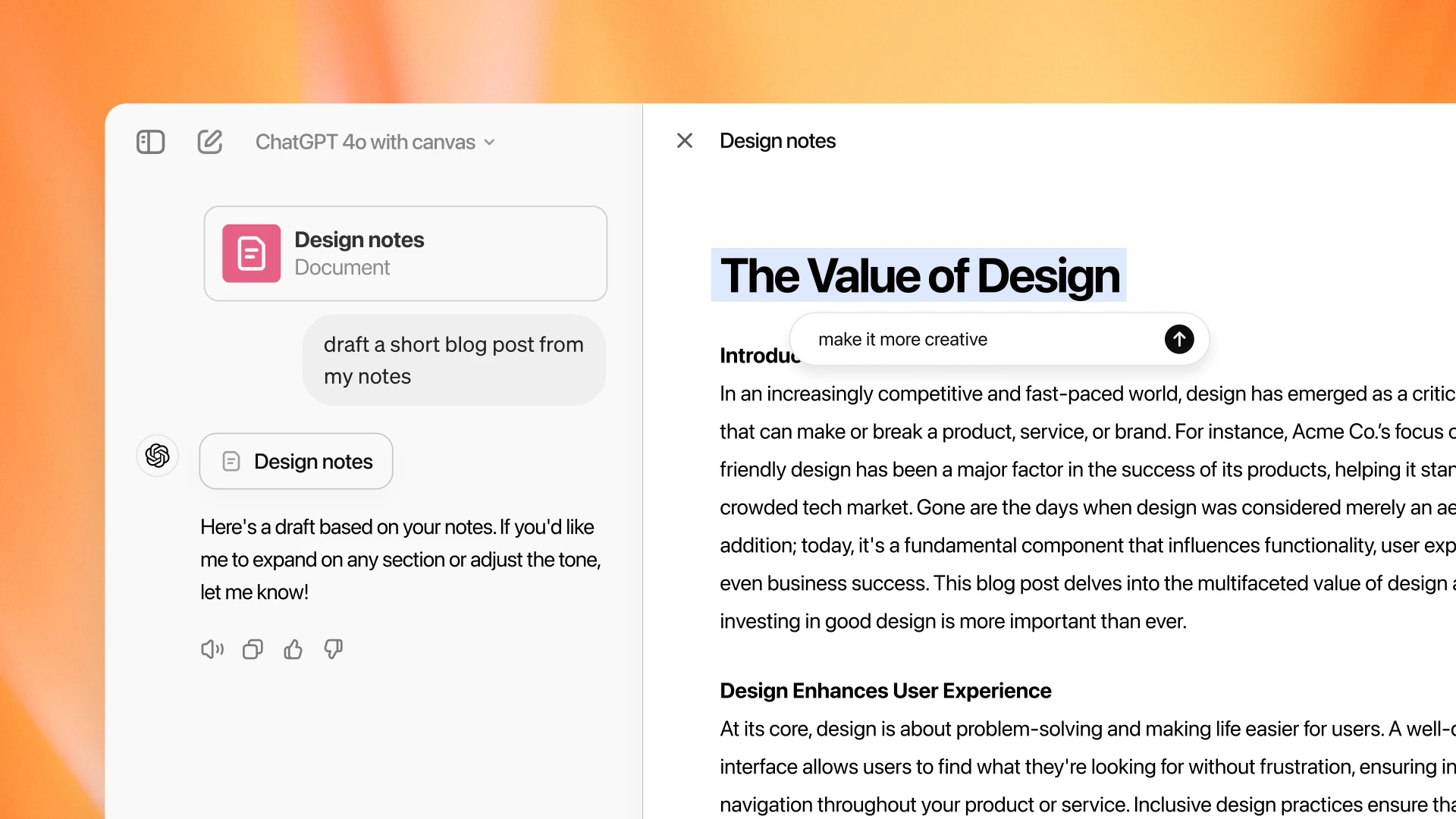

- Short prompts: As with DALL·E 3, Sora uses GPT to expand short user prompts into longer, detailed captions that are then sent to the video model. This helps Sora generate high-quality videos that closely follow user prompts.

- Image and video inputs: Sora can also be prompted with existing images or videos. This lets it perform a wide range of image and video editing tasks, such as creating perfectly looping videos, animating static images, and extending videos forward or backward in time.

- Image generation: Sora can also generate images of variable sizes, at resolutions up to 2048×2048.

- Interacting with the world: Sora can sometimes simulate actions that change the state of the world in simple ways, such as leaving bite marks in a burger that a person is eating.

[Video examples: animating images; video-to-video editing]

How can you access OpenAI’s Sora?

At the time of writing, Sora is not publicly available. Access is limited to red teamers, who are assessing the model for potential harms and risks, and to a select group of visual artists, designers, and filmmakers, whose feedback will help OpenAI make the model as useful as possible for creative professionals.

What are the limitations and concerns regarding Sora?

Despite its impressive capabilities, Sora is not without its limitations and potential drawbacks:

- Potential for misuse: Like any powerful tool, Sora could be misused to create harmful or misleading content. OpenAI’s research team is working on watermarking technology to flag AI-generated content and on safeguards to prevent the creation of harmful or offensive videos, but the ethical implications of such technology remain a crucial consideration.

- Bias and factual inaccuracies: As with other AI models, biases in the training data can carry over into the generated videos. The model may also introduce factual inaccuracies if it misinterprets the prompt.

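- Inaccurate simulations: The current model has clear weaknesses. It may struggle to simulate the physics of a complex scene accurately and may not understand specific instances of cause and effect; for example, a person might take a bite out of a cookie, yet the cookie shows no bite mark afterward. It may also struggle with precise descriptions of events that unfold over time, such as following a specific camera trajectory.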

What is possible with OpenAI’s Sora?

The possibilities unlocked by Sora are vast:

- Visual storytelling: Create animated shorts, music videos, or even film trailers based on your imagination.

- Product demonstrations: Showcase products or concepts in engaging video formats.

- Educational simulations: Bring complex subjects to life with immersive and visually compelling experiences.

- Accessibility: Turn written descriptions into visual explanations for audiences who learn better from video than from text.

What is the future of Text-to-Video Generation?

Sora marks a significant leap forward in text-to-video generation, blurring the lines between imagination and reality. While ethical considerations and responsible development are paramount, the technology’s potential applications are wide-ranging. From democratizing animation and filmmaking to revolutionizing education and entertainment, Sora’s journey has just begun.

OpenAI has been releasing generative AI tools at a breakneck pace ever since it launched ChatGPT in November 2022. Since then, it has shipped GPT-4, voice and image prompts, and the DALL·E 3 image model, all available through ChatGPT, and now it has kicked off 2024 with Sora.